Erik Strandness on the promise and perils of AI following The Big Conversation between Lord Martin Rees and Dr John Wyatt

In The Hitchhiker’s Guide to the Galaxy, the main characters, Arthur Dent and Ford Prefect, are rescued from deep space by the Heart of Gold, a spaceship equipped with revolutionary technology developed by the Sirius Cybernetics Corporation. The corporation incorporated GPP, or Genuine People Personality, into all the mechanical accessories of the ship so that each one exhibits a particular human temperament. Marvin the paranoid android, a GPP prototype, described it to Ford and Arthur as, “Absolutely ghastly… All the doors in this spaceship have a cheerful and sunny disposition. It is their pleasure to open for you, and their satisfaction to close again with the knowledge of a job well done.” As it turned out, making artificial intelligence (AI) more human-like also made it more irritating. As Marvin goes on to say, “You watch this door, it’s about to open again. I can tell by the intolerable air of smugness it suddenly generates.” It appears that the only thing worse than a smart robot is a smart-ass robot.

Is it possible that the AI promise of singing in perfect harmony will be replaced by the reality of technological personality conflicts? Do we already perceive an “intolerable air of smugness” in Alexa and Siri? AI personality disorders, while amusing, are the least of our problems because AI is threatening to redefine what it means to be human.

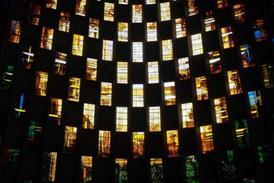

Artificial Intelligence (AI) is progressing at a rapid pace, greatly facilitating our ability to generate, store, and analyze information, but its undisputed computational competency is now being overshadowed by its dubious power to transform us. Is Artificial Intelligence a friend or foe of human flourishing? Astronomer Royal Lord Martin Rees, author of ‘On The Future’, and Christian bioethicist Dr John Wyatt, author of ‘The Robot Will See You Now’, discussed these questions alongside clips from interviews with robotics experts Nigel Crook and David Levy.

Computerizing Humans

One of the dangers of being overly optimistic about the ability of AI to approximate human consciousness is that over time we run the risk of inappropriately anthropomorphizing it. This phenomenon is called the Eliza Effect, defined by Wikipedia as “the susceptibility of people to read far more understanding than is warranted into strings of symbols—especially words—strung together by computers.” If robots are perceived to be sentient, then they potentially have rights. We already have lawyers filing lawsuits on behalf of chimps and dolphins for rights violations, but now we are potentially putting robot developers on the hook for labor abuses because they force their creations to compute without half-hour lunch breaks. How long will it be before Alexa gets legal representation?

The flip side of the danger of anthropomorphizing computers is computerizing humans. When humans are reduced to meat computers, their worth is calculated by their ability to acquire and process data. Sadly, awe and reverence are then deleted because they use up too much memory and adversely affect the system’s operating speed. When the human mind is reduced to a motherboard, the world is deprived of childlike wonder. In addition, those of us with aging hardware will be discarded because, as we all know, it is cheaper to throw away obsolete computers than to spend tech time making sure they are compatible with the newest software.

Hair of the Dog that Bit Us

One of the most concerning problems related to AI and robotics is the gradual displacement of humans from the workforce. We were created to be Garden tenders, so when you take away our rakes and hoes, we spend less time admiring God’s creation and more time contemplating all the things we could do if we were like gods.

Robots, however, are no longer just being employed to perform tedious manual labor but are now being utilized as human companions and caretakers. AI has moved on from assisting with diagnostics to becoming a therapy unto itself. Rees believes this is a very real problem because it inappropriately replaces human compassion with algorithmic empathy.

Ironically, the technology that has complicated our lives and made us more susceptible to anxiety and depression has become our healer. Is it possible to be comforted by the very machines that caused our depression? Is the technological hair of the dog that bit us appropriate therapy for a mental health crisis?

Kitty Litter Therapy

Pets are increasingly being viewed as therapy, but what about robots? I think it makes sense for animals to be therapeutic. If you believe that God spoke the world into creation, then animals are some of His most beautiful words, and spending time with them is like being bathed in a soothing divine rhetoric. But is it possible for a robot to also be an Aesculapian aide?

The answer is no. Pets are therapeutic not only because they provide companionship but because they fill our need to care for others. As Albert Schweitzer said, “I don’t know what your destiny will be, but one thing I know: the only ones among you who will be totally happy are those who will have sought and found how to serve.” The ability to serve others, furry or not, is absolutely essential to healing. Robots need nothing from us, and while they may keep us company, they can’t fill our need to sacrificially serve another. While cleaning kitty litter may seem inconvenient, it is therapeutic.

X’s and O’s

One of the excuses offered for the utilization of robot caretakers is that there is a shortage of human caretakers. Wyatt pushes back against this narrative.

“Of course it is true that there is an acute shortage of caretakers in our community and across many western countries but the reason for that is that their terms and conditions, their pay, their status, the way they’re treated, is that they’re regarded as absolutely the bottom of the rung…The challenge we’ve got is to reimagine the human caring role as something to be regarded as a high status, dignified, well-trained, well-paid job.”

I worked as a nurse’s assistant. It is an emotionally and physically taxing job which consists of bathing, feeding, and cleaning up after people who no longer have the capacity to care for themselves. I consider it to be one of the most rewarding jobs I have ever had, but as Wyatt said, it is low paying and viewed as the last link in the health care chain. Sadly, society doesn’t want to pay for caretakers, nurse assistants, or mental health counselors even though the population is aging, and our young people are in the middle of a profound meaning crisis. We need to place a higher value on these dedicated workers because the answer isn’t a technically adept mechanical laborer but a compassionate caretaker. A robot is only capable of analyzing the ones and zeroes of health metrics, but real therapy is found in the X’s and O’s of human empathy.

Life, Don’t Talk to Me about Life!

Being present to another human being doesn’t mean occupying a similar space but opening oneself up to the breadth of human experience by reflecting on a shared journey. A robot has no journey to share. AI and robotics expert Nigel Crook put it this way.

“The problem for me is that robot would have to experience so much of life to be able to truly empathize with that individual, that makes it beyond reach as far as I’m concerned at the moment. You could have a monitoring system with simple interaction with an elderly person which keeps them engaged, perhaps helps them to remember their past, shows them photographs of the past and enables them to sort of keep that memory alive but you would also need that human-human interaction.” (Nigel Crook)

Senior citizens tend to spend a lot of time reminiscing and trying to put their lives in perspective by exploring the past. AI will never understand the scars acquired from being bullied as a child, enduring a painful divorce, or grieving the loss of a loved one. Hearing, a robot will never truly hear. One of the ways we know someone cares about us is through their body language, intonation, and demeanor, but a robot friend will always look like they are just going through the algorithmic motions. As Marvin the paranoid android so succinctly put it in the Hitchhiker’s Guide, “life, don’t talk to me about life.”

Love by the Numbers

Caretaking robots are just the beginning; we are also considering the possibility of robots as sex therapy. David Levy, AI expert, described the situation this way.

“I see robots as being the answer to the prayers of all those millions of people all over the world who are lonely because they have no one to love and no one who loves them and for these people…they have a huge void in their lives and this void will be filled when there are very human like robots around with whom they can form emotional attachments, have sexual relations, and even marry them…I believe that robots are really the only answer.” (David Levy)

It used to be that couples entered into counseling because their love had become too mechanical, but in Levy’s world they may have been on the right track all along because love by the numbers turns out to be just as good as being twitterpated. Interestingly, on the internet, you need to prove that you are not a robot before entering into a relationship with a particular person or company, yet it appears that in the brave new world to come it will be preferable to be an automaton.

Transacting Love

The problem with Levy’s thesis is that he confuses sex and love.

“A lot of people ask the question, why is it better to be in love with or have sex with a robot than a human. To my mind that’s the wrong question, I think the real question is it better to have love and sex with robot or to have no love and sex at all?” (David Levy)

While it is true that a robot can be a physical gratification machine, the bigger question is: will it still need me, will it still feed me, when I’m 64? It may satisfy our healthy sexual desires, but will it care for us when we’re dying of cancer? Wyatt, commenting on the work of psychiatrist and sociologist Sherry Turkle, pointed out that when we start loving robots, we end up redefining love. Robot love reduces love to a transaction between the consumer and the consumed, where the motivation is the nuts and bolts of gratification and not the commitment to flesh and blood through richer and poorer. Love becomes a one-way street, a need to be met, and not a love to be requited. While adults can navigate this tension, young children cannot, and exposure to this deconstructed type of love will have profound consequences for our young people.

Conclusion

Both Rees and Wyatt are concerned about the impact that advances in AI and robotics will have on the future of mankind. Rees is concerned that it will result in the loss of our ability to apprehend wonder and mystery, key components of what it means to be human. Wyatt worries that our current “low tech human enhancement” is just the tip of an iceberg we will continue to minimize until we hit it head on and watch helplessly as our image bearing slowly drowns.

“I suspect that the drive for human enhancement is going to be massive on this planet… In many ways what we are seeing already is a kind of low-tech human enhancement. The idea of cosmetic surgery, gender changing surgery, recreational pharmaceuticals, this is all low-tech transhumanism. It shows that there is an inexhaustible appetite for us to improve our bodies. I suspect as the technology advances there is going to be more and more demand for sophisticated technologies to improve our bodies.” (Wyatt)
